Making sitemap files has never been so easy!

The GSiteCrawler is available for free and runs under Windows - all you need is an internet connection and the desire to make the most out of your website!

Do you only need a quick sitemap file? Just follow the steps in the integrated wizard and you'll have the sitemap file on your server in no time!

Are you looking for more than just a sitemap?

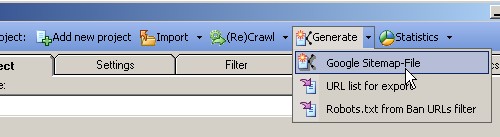

The program also offers tons of options, settings, tweaks and more if you want to do more than generate a simple sitemap file. How about a URL list file for Yahoo? An RSS feed? A ROR file? An HTML sitemap page? It's all possible with the GSiteCrawler!

GSiteCrawler Features

In general, the GSiteCrawler takes a listing of your website's URLs, lets you edit their settings and generates Google Sitemap files. However, the GSiteCrawler is very flexible and allows you to do a whole lot more than "just" that!

Capture URLs for your site using

- a normal website crawl - emulating a Googlebot, looking for all links and pages within your website

- an import of an existing Google Sitemap file

- an import of a server log file

- an import of any text file with URLs in it

The crawler

- does a text-based crawl of each page, even finding URLs in JavaScript

- respects your robots.txt file

- respects robots meta tags for index / follow

- can run up to 15 times in parallel

- can be throttled with a user-defined wait time between URLs

- can be controlled with filters, bans and automatic URL modifications
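The robots.txt rule in the list above can be illustrated with Python's standard `urllib.robotparser` module. This is a minimal sketch of how a crawler might filter its queue, not GSiteCrawler's own code (the page does not describe its internals); the robots.txt content, the user-agent string `GSiteCrawler` and the example.com URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, parsed directly instead of fetched from a server.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /tmp/
"""


def allowed_urls(urls, robots_lines, user_agent="GSiteCrawler"):
    """Return only the URLs that the robots.txt rules allow user_agent to fetch."""
    parser = RobotFileParser()
    parser.parse(robots_lines)  # accepts an iterable of robots.txt lines
    return [url for url in urls if parser.can_fetch(user_agent, url)]


candidates = [
    "https://www.example.com/",
    "https://www.example.com/private/report.html",
    "https://www.example.com/products/index.html",
]
print(allowed_urls(candidates, ROBOTS_TXT.splitlines()))
```

Only the URLs outside `/private/` and `/tmp/` survive; a real crawler would apply the same check before queueing each newly discovered link.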

With each page, it

- checks the date (from the server or from a date meta tag) and the size of the page

- checks title, description and keyword tags

- keeps track of the time required to download and crawl the page

Once the pages are in the database, you can

- modify Google Sitemap settings like "priority" and "change frequency"

- search for pages by URL parts, title, description or keywords tags

- filter pages based on custom criteria and adjust their settings globally

- edit, add and delete pages manually

From the database, the GSiteCrawler can generate

- a Google Sitemap file in XML format (of course :-)), with or without the optional attributes like "change date", "priority" or "change frequency"

- a text URL listing for other programs (or for use as a UrlList for Yahoo!)

- a simple RSS feed

- Excel / CSV files with URLs, settings and attributes like title, description, keywords

- a Google Base Bulk-Import file

- a ROR (Resources of a Resource) XML file

- a static HTML sitemap file (with relative or absolute paths)

- a new robots.txt file based on your chosen filters

- ... or almost any type of file you want - the export function uses a user-adjustable, text-based template system
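As a point of reference for the Google Sitemap export, here is a minimal Python sketch of a sitemap file in the sitemaps.org 0.9 XML format, with the optional `lastmod` ("change date"), `changefreq` and `priority` elements switched on or off. It is not GSiteCrawler's export code, whose templates may produce different output; the page data and example.com URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
OPTIONAL_TAGS = ("lastmod", "changefreq", "priority")


def build_sitemap(pages, include_optional=True):
    """Build a sitemap <urlset> document from a list of page dicts.

    Each dict needs a "loc" key; "lastmod", "changefreq" and "priority"
    are written only when present and include_optional is True.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = page["loc"]  # ElementTree escapes "&" etc.
        if include_optional:
            for tag in OPTIONAL_TAGS:
                if tag in page:
                    ET.SubElement(url, tag).text = str(page[tag])
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


pages = [
    {"loc": "https://www.example.com/", "lastmod": "2007-12-28",
     "changefreq": "daily", "priority": 1.0},
    {"loc": "https://www.example.com/about.html"},
]
print(build_sitemap(pages))
print(build_sitemap(pages, include_optional=False))
```

Pages without optional values simply get a bare `<loc>` entry, which the sitemap format allows.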

Its statistics include

- a general site overview with the number of URLs (total, crawlable, still in queue), oldest URLs, etc.

- a listing of all broken URLs linked in your site (or URLs that were otherwise inaccessible during the crawl)

- an overview of your site's speed with the largest pages, the slowest pages by total download time or download speed (unusually server-intensive pages), and those with the most processing time (many links)

- an overview of URLs leading to "duplicate content" - with the option of automatically disabling those pages for the Google Sitemap file
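The page does not say how duplicate content is detected. One simple approach, shown here as a sketch under that assumption, is to hash each page's whitespace-normalized content and group URLs that share a hash, keeping one URL per group and flagging the rest for exclusion from the sitemap. The function name, the "keep the shortest URL" rule and the sample pages are hypothetical, and exact hashing will not catch near-duplicates.

```python
import hashlib
import re
from collections import defaultdict


def find_duplicates(pages):
    """Group URLs whose bodies match after whitespace normalization.

    pages maps URL -> page text. Returns (keep, disable): one URL per
    group to keep, and the remaining URLs that could be left out of
    the sitemap.
    """
    groups = defaultdict(list)
    for url, body in pages.items():
        # Collapse runs of whitespace so trailing newlines or spacing don't matter.
        normalized = re.sub(r"\s+", " ", body).strip()
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        groups[digest].append(url)

    keep, disable = [], []
    for urls in groups.values():
        # Assumption: the shortest URL in each group is the one to keep.
        urls.sort(key=lambda u: (len(u), u))
        keep.append(urls[0])
        disable.extend(urls[1:])
    return keep, disable


pages = {
    "https://www.example.com/": "<html><body>Home</body></html>",
    "https://www.example.com/index.html": "<html><body>Home</body></html>\n",
    "https://www.example.com/about.html": "<html><body>About us</body></html>",
}
keep, disable = find_duplicates(pages)
print("keep:", keep)
print("disable:", disable)
```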

Other features

- It can run on just about any Windows version from Windows 95b onward (tested on Windows Vista beta 1 and all server versions).

- It can use local MS-Access databases for re-use with other tools

- It can also use SQL-Server or MSDE databases for larger sites (requires a separate installation file).

- It can be run in a network environment, splitting crawlers over multiple computers - sharing the same database (for both Access and SQL-Server).

- It can be run automated, either locally on the server or on a remote workstation with automatic FTP upload of the sitemap file.

- It tests for and recognizes non-standard file-not-found pages (without HTTP result code 404).
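A common way to recognize such "soft 404" pages is to request a URL that cannot exist, remember the body the server returns, and treat any 200 response that looks very similar as "not found". The sketch below shows that heuristic with Python's `difflib`; it is an assumption about the general technique, not a description of how GSiteCrawler does it, and the 0.8 similarity threshold and sample pages are hypothetical.

```python
import difflib


def is_soft_404(status, body, probe_body, threshold=0.8):
    """Guess whether a response is really a "file not found" page.

    probe_body is what the server returned for a URL known not to exist
    (for example, a randomly generated path). threshold is an assumed
    similarity cutoff between 0 and 1.
    """
    if status == 404:
        return True  # a standard not-found response
    if status != 200:
        return False  # other status codes are not treated as soft 404s here
    similarity = difflib.SequenceMatcher(None, body, probe_body).ratio()
    return similarity >= threshold


PROBE_BODY = "<html><body>Sorry, the page /x8f3k2 could not be found.</body></html>"
missing = "<html><body>Sorry, the page /old-page.html could not be found.</body></html>"
normal = "<html><body>Welcome to our product catalog with many items.</body></html>"

print(is_soft_404(200, missing, PROBE_BODY))
print(is_soft_404(200, normal, PROBE_BODY))
```

Comparing whole bodies is crude; real error templates often embed the requested URL or timestamps, which is why a similarity ratio is used instead of an exact match.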

GSiteCrawler Downloads

- You can currently download the GSiteCrawler for free!

The following files are available for download (all installations are in English and German):

Full installation

- Full installation, 12/28/2007

Be sure to download the current update as well.

Updates

- Update alone, 12/28/2007

Please check the beta-update for a newer version!

- Beta-Update, 12/28/2007

- Update for SQL-Server/MSDE, 12/28/2007


We list all changes (for both the beta version and the normal version) in our changelog.

Please do not link to the download files - the link will change depending on which version is currently available. Thanks!

Which files do I need?

If you are installing this program for the first time, you will need the full installation plus any applicable update, should it be newer than the full installation.

If you already have the GSiteCrawler installed, you'll only need to install the update. If you want to use the cutting-edge, newest version, you can use the beta version. The program checks for updates at startup and will inform you of matching updates. If you have the beta version installed, it will also inform you of all new beta updates.

If you have a large website, the MSDE / SQL-Server version might be for you. You'll need a normal full installation plus the update for the SQL version. This version installs as a separate executable file (GSiteCrawler2.exe) and requires some setup before you can use it. I recommend starting with the default version and upgrading to the SQL version later if size becomes an issue.

Why do the installations expire?

Since new versions contain all sorts of fixes (and, of course, new functions), I prefer to have as many users as possible working with them. The expiration dates are usually well within the usual update cycle.
